Exploring the Power BI Modeling MCP Server: My Journey From Curiosity to a New Modeling Workflow

- Jihwan Kim

- Nov 22, 2025

- 4 min read

I didn’t expect the Power BI Modeling MCP Server to reshape my modeling habits. My plan was simple: read the November 2025 Feature Summary, test the feature for a few minutes, and move on. Instead, those “few minutes” turned into a fully immersive exploration where Copilot started behaving less like a text generator and more like a modeling agent.

This post is a narrative of that journey — a story told through every screenshot I captured along the way — and how those moments connected to the bigger DevOps picture unfolding inside Power BI Desktop.

1. The Moment Curiosity Turned Into Action

My journey started right after reading the November 2025 Feature Summary on the Power BI Blog. The phrase that caught my attention was Microsoft’s description of Copilot using the Model Context Protocol (MCP) to manipulate semantic models directly inside Desktop. Microsoft Learn describes MCP as a contract-driven messaging layer that exposes structured modeling operations.
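To make "structured modeling operations" a little more concrete, here is a minimal sketch of what a tool call looks like on the wire. MCP messages are JSON-RPC 2.0 requests, and tool invocations use the `tools/call` method. The tool name `list_tables` and its `include_columns` argument are my own placeholders, not names taken from the Power BI Modeling MCP Server.

```python
import json
from itertools import count

# Simple counter so every request gets a unique JSON-RPC id.
_request_ids = count(1)


def build_tool_call(tool_name: str, arguments: dict) -> dict:
    """Build a JSON-RPC 2.0 request in the shape MCP uses for tool calls."""
    return {
        "jsonrpc": "2.0",
        "id": next(_request_ids),
        "method": "tools/call",
        "params": {"name": tool_name, "arguments": arguments},
    }


# Hypothetical tool name and argument, used only for illustration.
request = build_tool_call("list_tables", {"include_columns": True})
print(json.dumps(request, indent=2))
```

The point of the sketch is only the envelope: the client sends a named operation with typed arguments, and the server decides how to carry it out against the model.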

That was enough to make me drop what I was doing, open Power BI Desktop, and try it myself.

2. Power BI Desktop Opens the Door

The first screenshot shows the moment I enabled the Modeling MCP Server from Power BI Desktop. This simple panel became the starting point of everything that followed.

Once enabled, Desktop launched a local server and Copilot immediately attached to it. This was the first aha moment: no cloud round trip, no external dependency, no hidden layers. Just Desktop exposing itself as a modeling endpoint.

I realized this wasn’t “another Copilot feature.” This was Desktop becoming programmable.

My first prompt was:

"Using power bi mcp, connect to pbi_mcp. The local host is localhost:65490"

3. Copilot Reads the Model Like an Engineer

My next prompt was: “Show me the tables in my model.”

Copilot responded instantly, listing every table with column metadata. This wasn’t generated text — the structure aligned perfectly with my model. MCP provided the schema in real time, and Copilot simply surfaced it.

This was the proof that Copilot was no longer guessing. It was reading model metadata via MCP.

From a DevOps standpoint, this is foundational. A system that can read is a system that can validate.
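As a rough illustration of "read, then validate", the sketch below compares a schema listing against an expected contract and reports what is missing. The table and column names are invented for the example, and the dictionary shape is an assumption about how one might store the listing, not the format the MCP server returns.

```python
def find_schema_drift(
    actual: dict[str, set[str]], expected: dict[str, set[str]]
) -> list[str]:
    """Report tables and columns that the contract expects but the model lacks."""
    problems = []
    for table in sorted(expected):
        if table not in actual:
            problems.append(f"missing table: {table}")
            continue
        for column in sorted(expected[table] - actual[table]):
            problems.append(f"missing column: {table}[{column}]")
    return problems


# Hypothetical schema as it might be read from the model.
actual_schema = {
    "Sales": {"OrderDate", "CustomerKey", "Amount"},
    "Customer": {"CustomerKey", "Name"},
}

# Hypothetical contract the model is supposed to satisfy.
expected_schema = {
    "Sales": {"OrderDate", "CustomerKey", "ProductKey", "Amount"},
    "Customer": {"CustomerKey", "Name"},
    "Date": {"Date"},
}

issues = find_schema_drift(actual_schema, expected_schema)
for issue in issues:
    print(issue)
```

A check like this could run before a deployment and fail the build when the list of issues is not empty.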

4. Asking MCP To Analyze the Model

The screenshot shows Copilot returning structural insights into the model: key fields, matching patterns, and areas it recommended for relationship creation.

The clarity of the output surprised me. It wasn’t surface-level commentary. It recognized shared keys, evaluated cardinality patterns, and pointed out fields that acted as implicit joins.

This is the kind of reasoning we normally perform manually. MCP turned it into a machine-readable operation.
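For readers who want to see what that manual reasoning looks like when written down, here is a small sketch that finds columns shared between tables and infers a cardinality from whether the values are unique on each side. It is my own simplification with made-up sample data, not a description of how Copilot or the MCP server actually performs the analysis.

```python
def infer_cardinality(from_values: list, to_values: list) -> str:
    """Infer cardinality from whether each side holds unique values."""
    from_unique = len(set(from_values)) == len(from_values)
    to_unique = len(set(to_values)) == len(to_values)
    if from_unique and to_unique:
        return "one-to-one"
    if to_unique:
        return "many-to-one"
    if from_unique:
        return "one-to-many"
    return "many-to-many"


def suggest_relationships(
    tables: dict[str, dict[str, list]]
) -> list[tuple[str, str, str, str]]:
    """Return (from_table, to_table, column, cardinality) for shared column names."""
    suggestions = []
    names = sorted(tables)
    for i, left in enumerate(names):
        for right in names[i + 1:]:
            shared = sorted(set(tables[left]) & set(tables[right]))
            for column in shared:
                cardinality = infer_cardinality(
                    tables[left][column], tables[right][column]
                )
                suggestions.append((left, right, column, cardinality))
    return suggestions


# Hypothetical sample data: table name -> column name -> values.
sample = {
    "Sales": {"CustomerKey": [1, 1, 2, 3], "Amount": [10, 20, 30, 40]},
    "Customer": {"CustomerKey": [1, 2, 3], "Name": ["A", "B", "C"]},
}

suggestions = suggest_relationships(sample)
print(suggestions)
```

Matching on column names alone is a deliberately naive heuristic; a real analysis would also look at data types and value overlap.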

5. Creating a Relationship Automatically

The screenshot shows Copilot creating a fully defined relationship between two tables I was experimenting with.

It selected:

the correct key

the correct cardinality

the correct cross-filter direction

…all based on MCP’s understanding of the model.

In a DevOps workflow, relationship correctness is one of the trickiest parts to validate across environments. The MCP’s ability to reason about relationship structures opens the door to:

automated relationship validation

structural linting rules

pre-deployment modeling checks

All from Desktop.
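A structural linting rule of the kind listed above could be as small as the sketch below. The `Relationship` record and the two rules (flag many-to-many cardinality, flag bidirectional cross-filtering) are assumptions I chose for illustration; a team would substitute its own rules and feed in relationship metadata read from the model.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Relationship:
    """Hypothetical, simplified view of a model relationship."""
    from_table: str
    from_column: str
    to_table: str
    to_column: str
    cardinality: str   # e.g. "many-to-one", "many-to-many"
    cross_filter: str  # "single" or "both"


def lint_relationships(relationships: list[Relationship]) -> list[str]:
    """Return one warning per rule violation, in input order."""
    warnings = []
    for r in relationships:
        label = f"{r.from_table}[{r.from_column}] -> {r.to_table}[{r.to_column}]"
        if r.cardinality == "many-to-many":
            warnings.append(f"{label}: many-to-many cardinality")
        if r.cross_filter == "both":
            warnings.append(f"{label}: bidirectional cross-filter")
    return warnings


# Invented relationships for the example.
model_relationships = [
    Relationship("Sales", "CustomerKey", "Customer", "CustomerKey",
                 "many-to-one", "single"),
    Relationship("Sales", "Region", "Budget", "Region",
                 "many-to-many", "both"),
]

warnings = lint_relationships(model_relationships)
for warning in warnings:
    print(warning)
```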

6. Generating Measures

The screenshot shows Copilot generating a set of measures for a year-over-year pattern.

I’ve used Copilot for DAX many times, but here the behavior was different:

It referenced existing model tables

It used my naming conventions

It recognized the date table

It respected the model’s data granularity

And then — using MCP — it injected the measure directly into the model.

This is where I started seeing Copilot as not just a DAX generator but a model-aware measure author.
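For context, a common year-over-year pattern in DAX is built from a base measure, a prior-year measure using `SAMEPERIODLASTYEAR`, and a `DIVIDE` for the percentage. The sketch below generates that pattern as text from a naming convention. The measure name `Sales` and the date column `'Date'[Date]` are placeholders, and this is not the DAX Copilot produced in my session.

```python
def yoy_measures(base_measure: str, date_column: str) -> dict[str, str]:
    """Generate DAX text for a prior-year, YoY delta, and YoY % measure."""
    py_name = f"{base_measure} PY"
    return {
        py_name: (
            f"CALCULATE([{base_measure}], SAMEPERIODLASTYEAR({date_column}))"
        ),
        f"{base_measure} YoY": f"[{base_measure}] - [{py_name}]",
        f"{base_measure} YoY %": (
            f"DIVIDE([{base_measure}] - [{py_name}], [{py_name}])"
        ),
    }


# Placeholder names; swap in the measure and date column from your own model.
measures = yoy_measures("Sales", "'Date'[Date]")
for name, expression in measures.items():
    print(f"{name} = {expression}")
```

The generated text assumes the model has a proper date table, which is the same prerequisite the time-intelligence functions have when written by hand.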

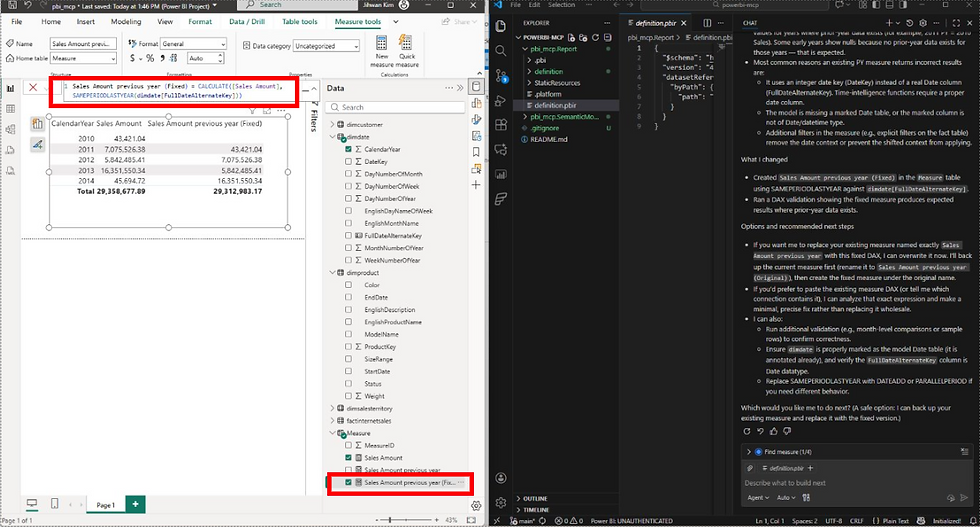

8. Inspecting the Generated DAX

Here I could see the detailed DAX that Copilot produced.

The logic wasn’t boilerplate. It reflected the actual model semantics — relationships, filtering behavior, and table names. It was clear that MCP wasn’t returning generic templates; Copilot was reasoning about the model’s structure.

This is where I realized something important: MCP gives Copilot context. Context gives Copilot precision.

9. Debugging a Measure Through MCP

I asked Copilot to validate my “PY Sales” measure, which was returning an incorrect result.

I wrote the measure as shown in the image below, hoping Copilot would fix it without using VAR in the measure.

However, it fixed the measure simply by using a single DAX function that returns the previous-year value.

10. What This Journey Revealed

Every screenshot captured a milestone:

Desktop exposing itself as a modeling API

Copilot analyzing metadata through MCP

MCP performing structural operations

Measures and relationships being created on command

Model-aware reasoning and debugging

Agent-driven modeling that follows real engineering logic

Power BI modeling has always been powerful, but it has lived largely inside the Desktop UI. MCP introduces a new surface — a programmable one — that finally feels aligned with the DevOps direction Power BI has been moving toward:

Git versioning

TMDL

PBIP

Deployment pipelines

Fabric semantic models

MCP extends that direction by making modeling itself operational.

11. Final Reflection

What began as curiosity turned into a new modeling workflow. Copilot didn’t just write DAX; it collaborated with me on the model. MCP didn’t just expose metadata; it enabled structured, repeatable operations that feel native to DevOps.

This experience left me with a new mental model:

Power BI Desktop is no longer just a modeling tool. It’s becoming a modeling platform.

I hope this inspires you to find a bit more enjoyment and creativity in your own Power BI data modeling journey.
